The More, The Better? Active Silencing of Non-Positive Transfer for Efficient Multi-Domain Few-Shot Classification

Xingxing Zhang1

Zhizhe Liu2

Weikai Yang1

Liyuan Wang1

Jun Zhu1

1Tsinghua University

2Beijing Jiaotong University

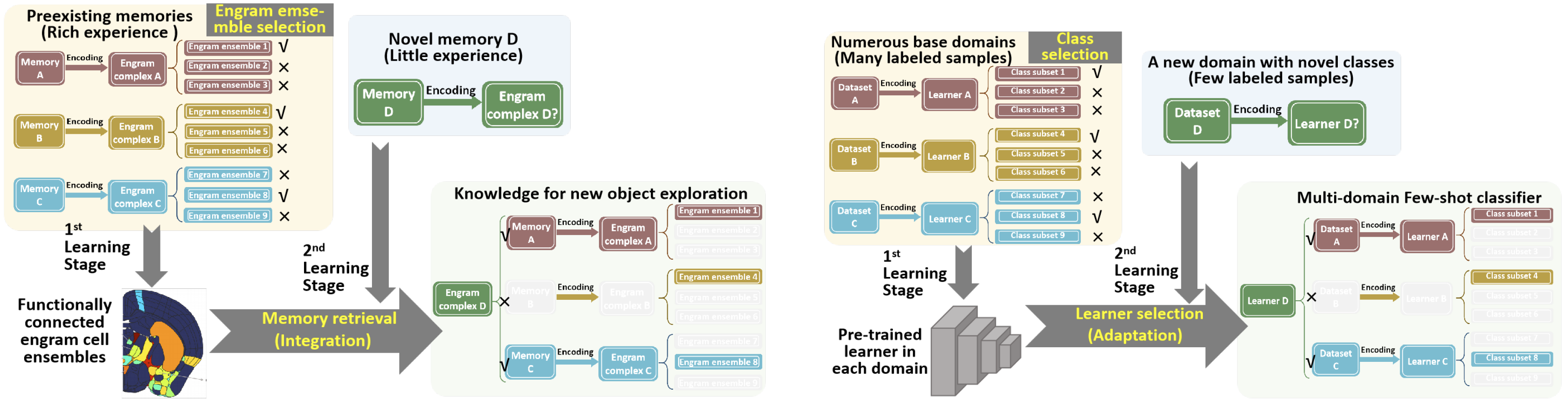

Few-shot classification refers to recognizing several novel classes given only a few labeled samples. Many recent methods try to gain an adaptation benefit by learning prior knowledge from more base training domains, a setting known as multi-domain few-shot classification. However, with extensive empirical evidence, we find that more is not always better: current models do not necessarily benefit from pre-training on more base classes and domains, since the pre-trained knowledge might be non-positive for a downstream task. In this work, we hypothesize that such redundant pre-training can be avoided without compromising downstream performance. Inspired by the selective activating/silencing mechanism in the biological memory system, which enables the brain to learn a new concept from a few experiences both quickly and accurately, we propose to actively silence redundant base classes and domains for efficient multi-domain few-shot classification. To this end, we develop a novel data-driven approach named Active Silencing with hierarchical Subset Selection (AS3), which addresses two problems: 1) finding a subset of base classes that adequately represents the novel classes for efficient positive transfer; and 2) finding a subset of base learners (i.e., domains) that make confident and accurate predictions in a new domain. Both problems are formulated as distance-based sparse subset selection. We extensively evaluate AS3 on the recent META-DATASET benchmark as well as MNIST, CIFAR10, and CIFAR100, where AS3 achieves over 100% acceleration while maintaining or even improving accuracy.
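
To make the idea of distance-based subset selection concrete, the following is a minimal illustrative sketch and not the AS3 algorithm itself, whose formulation is not given in the abstract. It assumes a precomputed dissimilarity matrix between base-class and novel-class prototypes, and it swaps the sparse optimization for a simple greedy facility-location heuristic. The names `greedy_subset_selection`, `base`, `novel`, and `dist` are hypothetical and chosen only for this example.

```python
import numpy as np


def greedy_subset_selection(dist, k):
    """Greedily pick up to k base classes that best represent the novel classes.

    dist[i, j] is an assumed dissimilarity between base class i and novel
    class j. The cost of a subset is the sum, over novel classes, of the
    distance to the closest selected base class.
    """
    n_base, n_novel = dist.shape
    picked = np.zeros(n_base, dtype=bool)
    selected = []
    # Best distance so far from each novel class to the selected subset.
    best = np.full(n_novel, np.inf)
    for _ in range(min(k, n_base)):
        # Total cost if each candidate base class were added to the subset.
        cand_cost = np.minimum(best[None, :], dist).sum(axis=1)
        cand_cost[picked] = np.inf  # never pick the same class twice
        i = int(np.argmin(cand_cost))
        picked[i] = True
        selected.append(i)
        best = np.minimum(best, dist[i])
    return selected, float(best.sum())


# Toy 2-D prototypes (made up for illustration): four base classes, two novel classes.
base = np.array([[0.0, 0.0], [10.0, 10.0], [0.0, 1.0], [20.0, 20.0]])
novel = np.array([[0.0, 0.0], [10.0, 9.0]])

# Euclidean distance between every base prototype and every novel prototype.
dist = np.linalg.norm(base[:, None, :] - novel[None, :, :], axis=-1)

selected, cost = greedy_subset_selection(dist, k=2)
print(selected, cost)
```

In this toy setting the two base classes left out of the subset (indices 2 and 3) are the ones that would be "silenced": one is redundant with an already selected class and the other is far from every novel class.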